In democracies, elections are a period of heightened vulnerability in the information sphere. Socially and politically significant issues come to a head during campaigns, which makes external manipulation particularly prominent. The toolkit of malicious international actors has expanded significantly with advances in artificial intelligence (AI).

The German parliamentary elections on February 23, 2025, were marked by an unprecedented level of external information interference. One of the most debated topics in Western media was the U.S. administration’s open support for the right-wing populist party Alternative for Germany (AfD). While U.S. Vice President J.D. Vance and Director of the Department of Government Efficiency (DOGE) Elon Musk openly expressed their support for AfD, it is crucial not to overlook the ongoing influence of Russia’s propaganda machine on Western European audiences.

Previously, the Hybrid Warfare Analytical Group (HWAG) documented Russia’s use of AI to orchestrate disinformation campaigns against Ukraine and the West. This study presents documented attempts of Russian external interference in the German elections using AI, aiming to influence the final outcome.

Kremlin’s Lost Bet

The Bundestag elections resulted in five parties securing seats: the “Union” (CDU/CSU), Alternative for Germany (AfD), the Social Democratic Party of Germany (SPD), the Greens, and the Left Party. The election reflected a noticeable shift of the German electorate toward right-wing populism, with AfD securing a solid foothold in this political segment, finishing second with 20.8% of the vote.

AfD has long been sympathetic to Moscow, advocating for the restoration of Russian energy supplies and the cessation of support for Ukraine. Kremlin propaganda reciprocates by amplifying the voices of far-right “Putinverstehers” and deploying bot armies to bolster AfD’s influence.

However, despite the ultra-right’s success, no champagne was uncorked in the Kremlin after the polls closed: Moscow’s promising left-populist asset, the Sahra Wagenknecht Alliance (BSW), failed to pass the 5% threshold and missed out on Bundestag representation.

As a result, the seat distribution allows the two “people’s parties” – CDU/CSU and SPD – to form a bipartisan “Grand Coalition.” Even if the Greens, who were part of the previous “traffic light” coalition, are left out of the new majority, their votes will still ensure continued support for Berlin’s pro-Ukraine policy in the coming years.

The only consolation prize for Moscow is the “blocking minority” secured by far-right and far-left forces. Preliminary counts indicate that AfD and the Left Party (Die Linke) will control over 210 seats, which could complicate efforts to increase Germany’s defense budget and military aid to Ukraine. Notably, Left Party leaders have already stated that they would support reforms to Germany’s “debt brake” (a mechanism limiting budget deficits to 0.35% of GDP) only if the additional funds are not allocated to defense spending.

Rules Not for Everyone

Ahead of the elections, Germany’s Ministry of the Interior (BMI) issued a warning about foreign interference. According to official data, nearly 90% of German voters expressed concerns about manipulation by foreign actors.

Polls indicated that 45% of Germans identified Russia as the main threat, 42% pointed to the United States, and 26% named China. Additionally, more than half of respondents believed their country was unprepared to combat deepfakes and digital manipulation.

In response to growing public fears over external hybrid influences, Germany’s major “systemic” parties – CDU/CSU, SPD, FDP, the Greens, and Die Linke – signed a Fairness Pact for the Bundestag Elections. The signatory parties committed to refraining from personal attacks and derogatory remarks about opponents’ private or professional lives; avoiding the use of misleading information, even if generated by third parties; opposing fake accounts and ensuring that political messages were clearly labeled with party logos; and marking campaign content created with AI accordingly.

Notably, AfD and BSW refused to participate in the agreement, rejecting measures aimed at ensuring a fair and transparent information environment in the electoral competition.

(Photo deutschlandfunk.de)

AI-Driven Disinformation Operations: The Battlefield – Germany

Russia’s influence on Germany’s political landscape is growing with each electoral cycle. Since severing ties with Western political circles in 2022, the Kremlin has been deploying a wide range of advanced influence technologies against European societies. At the forefront of these efforts is the use of AI to generate politically manipulative content.

The Hybrid Warfare Analytical Group has identified several examples of Russian public opinion manipulation campaigns from 2022 to 2024. These examples represent the “preparatory stage” that preceded a full-scale information attack during Germany’s recent Bundestag election campaign.

Operation “Doppelgänger”. A long-running Russian information operation aimed at discrediting German media outlets, including Spiegel, Welt, and Süddeutsche Zeitung. The disinformation campaign was first documented in 2022 by the European organization EU DisinfoLab and the social media giant Meta.

The primary goal of Doppelgänger was to spread fake narratives about Ukraine, Western military aid, and Germany’s social issues. AI was extensively used to generate fake news articles, social media posts, and automated comments that fueled societal tensions and public debates.

Investigations uncovered over 50,000 fake social media accounts actively promoting anti-Ukrainian narratives and undermining trust in the German government. According to official sources, as of February 2025, more than 100 fake German-language news websites had been identified.

Cloned web pages of Der Spiegel (left) and Die Welt (right)

(Source: URLscan.io)

Fabricated Sex Scandals Involving Green Party Leaders. In the summer of 2024, a deepfake video emerged featuring a Nigerian “escort boy” falsely claiming that Foreign Minister Annalena Baerbock was his client. Later, in late 2024, another deepfake video falsely accused Economy Minister Robert Habeck of sexual assault.

Disinformation researchers link both incidents to Russian influence campaigns. According to the Hybrid Warfare Analytical Group, the orchestrators of these media attacks aimed to damage the personal reputations of key government officials. The sex scandal narrative was deliberately chosen to plant fabricated stories in tabloid media, maximizing public reach and engagement.

Deepfake Video Featuring Olaf Scholz. In the fall of 2023, a deepfake video surfaced online in which German Chancellor Olaf Scholz appeared to call for a ban on the far-right party AfD. The video was based on a real speech by Scholz, but his voice was synthetically altered, and the text was modified.

The fake video was created by the artistic group “Center for Political Beauty” (ZPS), an organization that describes its work as a “radical form of humanism”. Its artists deliberately create projects that “initiate dialogue with society, annoy and derail”. It is important to note that ZPS is not affiliated with Russia – in fact, in 2021, its members actively disrupted the distribution of millions of AfD campaign leaflets.

This case illustrates how a fake narrative initially created by a third party unrelated to Russia can later be exploited by Russian bot networks because it aligns with Kremlin-backed discourse. Such appropriation often distorts the original intent of the media product. In the case of the Scholz deepfake, its original grotesque, provocative artistic form was replaced by a misleading narrative portraying the German government as anti-democratic.

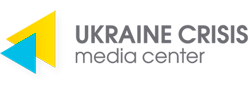

Far-Right AI Propaganda During the European Parliament Elections. Ahead of the 2024 European Parliament elections, German far-right parties actively used AI-generated images, posters, and audio content to promote narratives related to migration, LGBTQ+ issues, and “national traditions.” These materials were not labeled as AI-generated, violating EU regulatory rules and contributing to the spread of political disinformation.

Spreading Manipulative Content Through Pranksters. Russian propagandists have repeatedly used deepfake technology in prank calls targeting European officials and politicians, including Germany’s Economy Minister Robert Habeck. These calls were designed to extract compromising or politically sensitive statements, which were then amplified by Russian media outlets.

According to a recent study by the Media Program of the Konrad Adenauer Foundation, most of these incidents can be traced back to Russian actors. While Russia was not always directly responsible for creating the content, it played a key role in spreading manipulative materials through social media using fake accounts.

Attacked Elections

The peak of information influence operations targeting German society predictably occurred at the beginning of this year, coinciding with the final stage of the election campaign.

The Institute for Strategic Dialogue (a network of European independent organizations focused on human rights, counter-extremism, and disinformation) uncovered a coordinated network on X spreading disinformation about German politicians and terrorist threats in the context of the elections. This network consisted of approximately 50 bot accounts. Researchers noted that its algorithmic patterns resembled Russia’s information operation “Overload.”

Launched in early 2024, the Overload campaign was built around a tactic of cloning websites that mimicked major European media outlets. According to the Finnish fact-checking platform Check First, “a key feature of this operation is the mass distribution of anonymous emails to media organizations, containing links to fake content and anti-Ukrainian narratives, particularly targeting France and Germany.”

Meanwhile, participants in the Gnida project, in collaboration with Correctiv and NewsGuard, identified over a hundred German-language websites initially filled with pro-Russian AI-generated content. Later, these websites began publishing false reports, which were then disseminated on social media platforms like X and Telegram – either by “friends” of the operation or paid influencers.

In the lead-up to the election, Russia attempted to discredit traditional news sources and create an atmosphere of distrust among German voters.

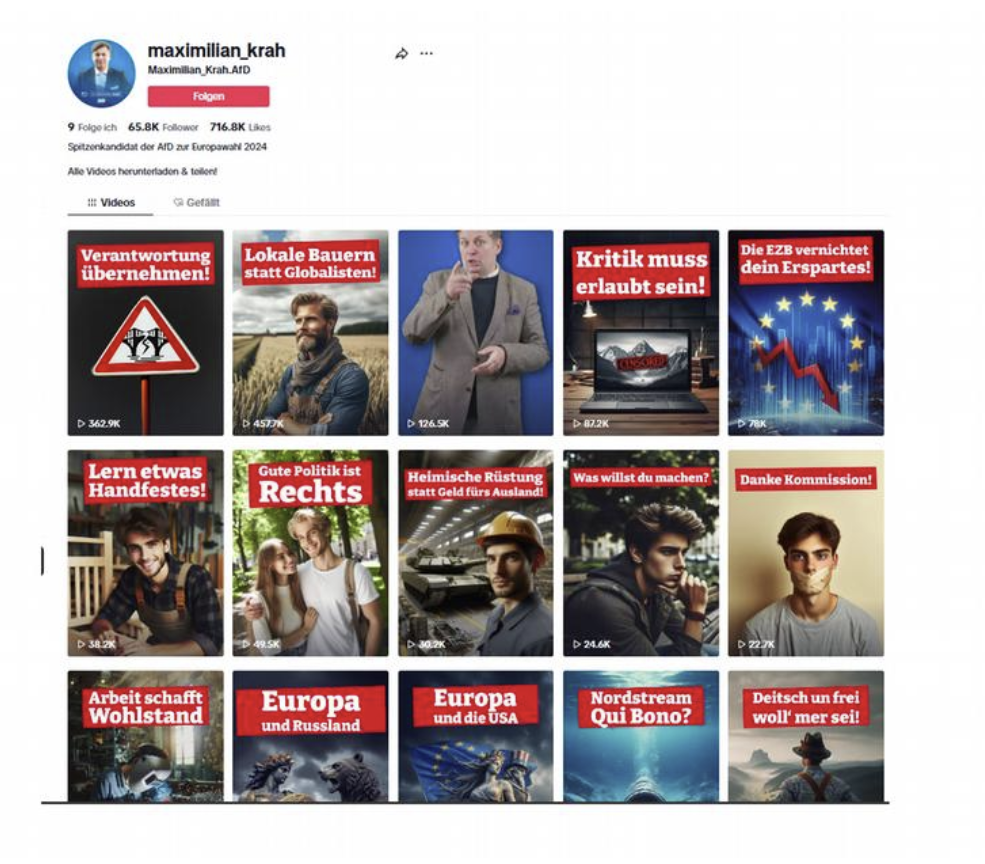

Right: Another manipulated video falsely alleging that British intelligence (MI6) warned against traveling to Germany ahead of the elections. (Source: isdglobal.org)

Another bot network documented by the Institute for Strategic Dialogue consisted of over 6,000 accounts. Its primary goal was the dissemination of deepfake videos.

Initially, from November 2024, the network focused on the topic of “rising antisemitism” in Germany. From January 10, 2025, however, it shifted its focus to the elections, publishing false accusations against German politicians, including claims of corruption and pedophilia. The primary targets were CDU chancellor candidate Friedrich Merz, Janine Wissler (Die Linke), and former CDU leader Armin Laschet.

The network’s videos misused the logos of legitimate media outlets, including Deutsche Welle, BBC, and Sky News. Since early 2025, the operation forged content from at least 20 organizations using AI. Additionally, the network was used by Russia to spread fake videos claiming that ballots for AfD were shredded.

Notably, the videos were published in different languages – primarily English, Spanish, and Arabic – but not German. This suggests that the campaign’s goal was not only domestic disinformation but also discrediting Germany’s elections on the international stage. Such efforts help lay the groundwork for future Kremlin-backed campaigns aimed at undermining trust in the German government in third countries.

The “Favorite” Target of Russian Disinformation

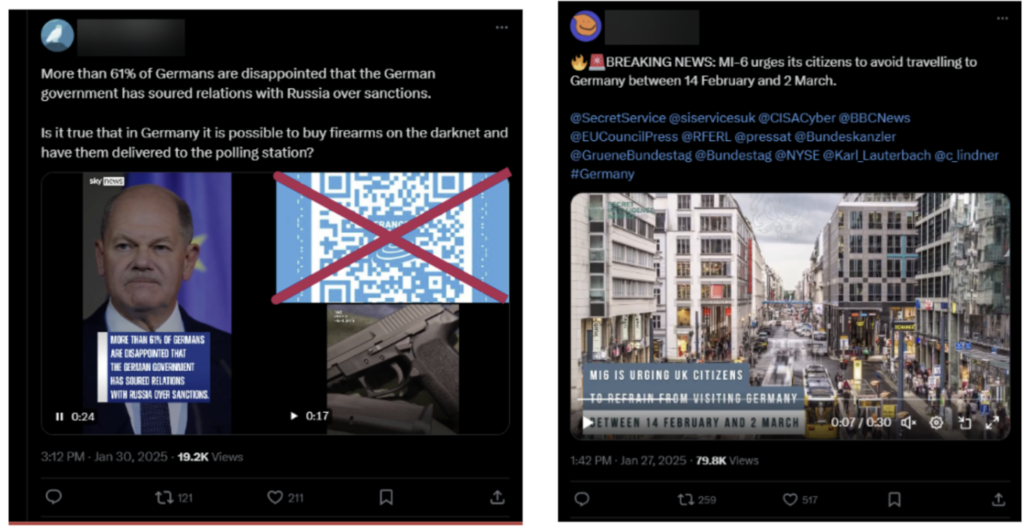

Ahead of the elections, one of the main targets of pro-Russian disinformation campaigns was Friedrich Merz, the leader of the German opposition and a frontrunner for the chancellorship. In the German social media space, fabricated statements falsely claiming that Merz intended to introduce harsh austerity measures were widely circulated.

Perhaps the most damaging attack against Merz was the publication of falsified claims about a “suicide attempt” in 2017. A viral video alleged that Friedrich Merz was mentally unstable and had undergone treatment at a hospital. However, the Karolinen Hospital, which was referenced in the disinformation, denied these claims, stating that the doctor mentioned in the fake video does not exist. Additionally, the medical document purporting to show Merz’s hospitalization was exposed as a crude forgery.

Fragmented Response

The recent parliamentary elections in Germany have demonstrated that Europe’s leading nations need to rethink their strategies for combating disinformation, especially in the face of rapid advancements in AI-driven technologies.

According to official reports, the German government has intensified its efforts to counter foreign influence, working closely with EU cybersecurity agencies. The Federal Office for Information Security (BSI) has launched real-time monitoring of online narratives and awareness campaigns to help voters recognize fake news. Additionally, law enforcement agencies are investigating networks involved in orchestrating disinformation attacks.

However, some disinformation experts argue that Germany’s countermeasures remain insufficient. According to Mina Trpkovic, a research fellow at the Frankfurt Institute for Peace Research, Germany’s approach to disinformation remains fragmented. “This only underscores the urgent – and, given recent events, long-overdue – need for a comprehensive strategy aimed at better understanding and countering disinformation challenges, both during and outside election periods. While various government agencies monitor and combat foreign influence, there is still no single centralized strategy,” Trpkovic concludes.

The German Bundestag election campaign has highlighted the growing role of AI in external information operations. Russia is leveraging advanced communication technologies to manipulate public opinion in European countries and undermine trust in elections as a cornerstone of democracy. At the same time, the Kremlin is pursuing additional tactical objectives – discrediting its political opponents and authoritative Western media in the eyes of European audiences.

The AI-powered information interference campaigns targeting European elections underscore the urgent need for a rapid response from governments and security agencies. However, as of today, efforts to coordinate a Europe-wide response to disinformation are hampered by a lack of transatlantic unity. As a result, a more effective approach may be for like-minded European nations to collaborate in addressing the risks posed by international disinformation.

The technological evolution of influence campaigns, increased election interference, and the growing alignment between external disinformation actors and Euroskeptic forces within the EU all demand a shift in Europe’s approach – from a peacetime mindset to a state of heightened preparedness.

By Volodymyr Solovian